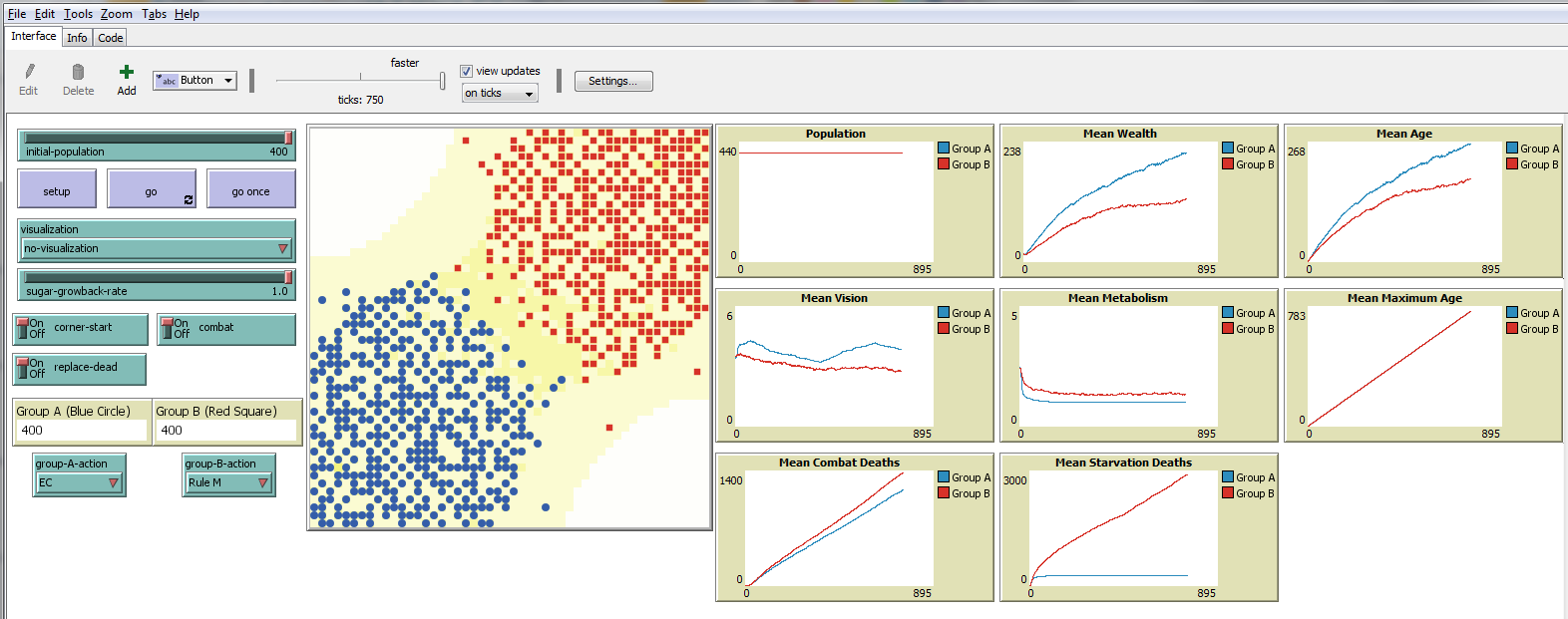

On 8 October 2020, I presented a paper at CSS 2020, the annual conference of the Computational Social Science Society of the Americas. I am the lead author of the paper, "Creating Intelligent Agents: Combining Agent-Based Modeling with Machine Learning," written with my co-author, Dr. Andrew Crooks. We used the well-known Sugarscape agent-based model (Epstein & Axtell, 1996) as the basis for examining how reinforcement learning and evolutionary computing can substitute for the simple rules normally used in such models. By adding combat to the model, we were able to see how the different methods fared in pairwise competition.

Among our conclusions, we argue that it is worth adding machine learning to agent-based models if there is no other way to achieve your model's goal. Doing so allows agents to learn from good and bad experiences. The downside is the increase in computational resources needed. Big data and cloud-based computing have increased the availability of such resources, but cost is still a limitation, and wasted time cannot be recovered. In our experience with this model, some machine learning methods took seven times longer (wall-clock time) to execute than the rule-based method over the same number of time steps.

Dr. Crooks' comments on our paper can be found on his blog. Links to the paper's preprint, model, and conference presentation can be found below.

Abstract

Over the last two decades, with advances in computational availability and power, we have seen a rapid increase in the development and use of Machine Learning (ML) solutions applied to a wide range of applications including their use within agent-based models. However, little attention has been given to how different ML methods alter the simulation results. Within this paper, we discuss how ML methods have been utilized within agent-based models and explore how different methods affect the results. We do this by extending the Sugarscape model to include three ML methods: evolutionary computing and two reinforcement-learning algorithms, i.e., Q-Learning and State→Action→Reward→State→Action (SARSA). We pit these ML methods against each other and the normal functioning of the rule-based method (Rule M) in pairwise combat. Our results demonstrate ML methods can be integrated into agent-based models, that learning does not always mean better results, and that agent attributes considered important to the modeler might not be to the agent. Our paper's contribution to the field of agent-based modeling is not only to show how previous researchers have used ML but also to directly compare and contrast how different ML methods used in the same model impact the simulation outcome. Since this is rarely discussed, doing so will help bring awareness to researchers who are considering using intelligent agents to improve their models.
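For readers unfamiliar with the two reinforcement-learning algorithms named in the abstract, here is a minimal Python sketch of the textbook tabular update rules. It is not the code from our model. The dictionary-based Q-table, the state and action names, and the `alpha` (learning rate) and `gamma` (discount) values are all assumptions chosen for illustration. The only difference between the two methods is which next-state value is used: Q-Learning uses the best available action, while SARSA uses the action the agent actually takes next.

```python
def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Off-policy update: bootstrap from the best action in next_state."""
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)


def sarsa_update(q, state, action, reward, next_state, next_action,
                 alpha=0.1, gamma=0.9):
    """On-policy update: bootstrap from the action actually taken next."""
    next_value = q.get((next_state, next_action), 0.0)
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * next_value - old)


# Hypothetical example: an agent moves "north" from cell s0 to cell s1
# and collects 2 units of sugar as its reward.
actions = ["north", "south"]
initial = {("s1", "north"): 1.0, ("s1", "south"): 0.0}

q_table = dict(initial)
q_learning_update(q_table, "s0", "north", 2.0, "s1", actions)

sarsa_table = dict(initial)
# Here the agent's next move happens to be the lower-valued "south".
sarsa_update(sarsa_table, "s0", "north", 2.0, "s1", "south")

print(q_table[("s0", "north")], sarsa_table[("s0", "north")])
```

With these made-up numbers, Q-Learning credits the move with the value of the best follow-up action, whereas SARSA credits it with the value of the follow-up action the agent really chose, so the two tables diverge whenever the agent explores.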

The model and Overview, Design concepts, and Details (ODD) can both be obtained by selecting the Download Version button on the CoMSES OpenABM site at https://tinyurl.com/ML-Agents.

Cite (paper): Brearcliffe, D., & Crooks, A. (2020). Creating Intelligent Agents: Combining Agent-Based Modeling with Machine Learning (No. 4403). EasyChair.